Reinforcement learning from human feedback (RLHF) has received a lot of attention in recent years and has been the subject of much research and development. Also, I see several tweets in the past several days indicating that 9 out of 10 researches in Artificial Intelligence are directed toward RLHF and make this subject hyped in media. It is also important to recognize that RLHF is just one of many approaches to training machine learning systems, and it may not be the best choice for every task or situation.

So, what is Reinforcement Learning from Human Feedback?

Reinforcement learning (RL) is a type of machine learning in which an agent learns to interact with its environment in order to maximize a reward signal. In RL from human feedback, the agent receives feedback in the form of rewards or punishments from a human teacher or supervisor, rather than from the environment itself.

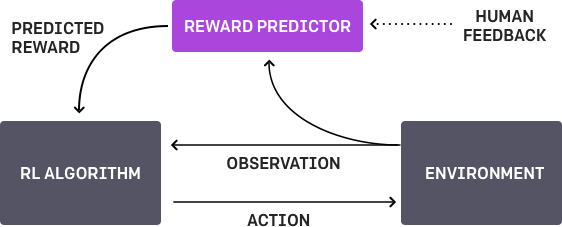

Reinforcement learning from human feedback (RLHF) image by OpenAI

RL from human feedback can be used to teach an agent how to perform a specific task, such as playing a video game or navigating a robot through a maze. The agent learns through trial and error, trying different actions and receiving feedback from the human teacher on its performance. The feedback can be in the form of a numerical reward or punishment, or it can be more abstract, such as verbal praise or criticism.

RL from human feedback can be useful in situations where it is difficult or impractical to define a precise reward function for the agent to optimize. It can also be useful for tasks that require a high degree of flexibility or adaptability, since the human teacher can provide guidance and adjust the learning process as needed.

Is it as promising as it sounds?

Reinforcement learning from human feedback (RLHF) can be a promising approach to training machine learning systems, particularly in situations where it is difficult or impractical to define a precise reward function for the agent to optimize. RLHF can allow the human teacher to provide more nuanced and context-specific guidance to the agent, which can be particularly useful for tasks that require a high degree of flexibility or adaptability.

However, there are also some limitations and challenges to using RLHF. One challenge is the need for a human teacher to provide consistent and reliable feedback, which can be time-consuming and may require significant training and expertise. Additionally, RLHF can be more susceptible to biases and subjective judgment on the part of the human teacher, which can affect the agent’s learning process.

Which methods are usually used in conjunction with RLHF?

There are several methods that can be used in conjunction with reinforcement learning from human feedback (RLHF) to improve the effectiveness and efficiency of the learning process.

Some examples include:

Imitation learning: This is a type of supervised learning in which an agent learns to perform a task by observing and copying the actions of a human expert. Imitation learning can be used to quickly bootstrap the learning process and provide a strong baseline for the agent to build upon. To use imitation learning with RLHF, the human teacher can first demonstrate the task to the agent, and the agent can then learn to perform the task by observing and copying the actions of the human expert. The human teacher can provide additional feedback and guidance as needed to help the agent learn more effectively.

Self-supervised learning: This is a type of unsupervised learning in which an agent learns to perform a task using only the inherent structure and regularities in the environment. Self-supervised learning can be used to help the agent learn more efficiently and effectively, particularly when the human feedback is sparse or noisy. To use self-supervised learning with RLHF, the agent can be trained to learn from the inherent structure and regularities in the environment, such as by predicting the next frame in a video or the next word in a sentence. The human teacher can then provide feedback on the agent’s performance and adjust the learning process as needed.

Transfer learning: This is a machine learning technique that allows an agent to use its knowledge and experience from one task to learn more quickly and effectively on a new task. Transfer learning can be used to help the agent generalize its learning to new situations and environments, reducing the need for human feedback. To use transfer learning with RLHF, the agent can be pre-trained on a related task or set of tasks, and then fine-tuned on the target task using RLHF. This can allow the agent to learn more quickly and effectively on the target task, since it has already learned some of the relevant skills and knowledge from the pre-training stage.

Ensemble methods: These are techniques that combine the predictions or actions of multiple models to produce a more accurate or robust result. Ensemble methods can be used to combine the predictions or actions of multiple RLHF models to improve their performance. To use ensemble methods with RLHF, multiple RLHF models can be trained on the same task, and their predictions or actions can be combined to produce a more accurate or robust result. The human teacher can provide feedback on the performance of the ensemble as a whole, rather than on each individual model.

So, what’s the conclusion?

In conclusion, RLHF is a promising approach to training machine learning systems, particularly in situations where it is difficult or impractical to define a precise reward function for the agent to optimize. RLHF can allow the human teacher to provide more nuanced and context-specific guidance to the agent, which can be particularly useful for tasks that require a high degree of flexibility or adaptability.

However, RLHF also has its limitations:

- RLHF requires a human teacher to provide consistent and reliable feedback, which can be time-consuming and may require significant training and expertise. This can be a limitation in situations where human resources are scarce or where the feedback process is too complex or expensive.

- RLHF can be more susceptible to biases and subjective judgment on the part of the human teacher, which can affect the agent’s learning process. This can be a concern in situations where fairness or objectivity is important.

- RLHF may not be the most efficient or effective approach for certain tasks, particularly those that can be easily defined using a precise reward function or that require a high degree of specialization or fine-tuning.

- RLHF may not be well-suited for tasks that require a high degree of robustness or generalization, since the feedback provided by the human teacher may not be comprehensive or representative of all possible situations.

Therefore, it is important to carefully consider the strengths and limitations of RLHF and to use it in conjunction with other methods as appropriate.

Leave your comment